---
library_name: transformers
license: apache-2.0
license_link: https://huggingface.co/Qwen/Qwen3-Coder-Next/blob/main/LICENSE
pipeline_tag: text-generation
---

# Qwen3-Coder-Next
<a href="https://chat.qwen.ai/" target="_blank" style="margin: 2px;">
    <img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>
</a>

## Highlights

|

| 14 |

+

|

| 15 |

+

Today, we're announcing **Qwen3-Coder-Next**, an open-weight language model designed specifically for coding agents and local development. It features the following key enhancements:

|

| 16 |

+

|

| 17 |

+

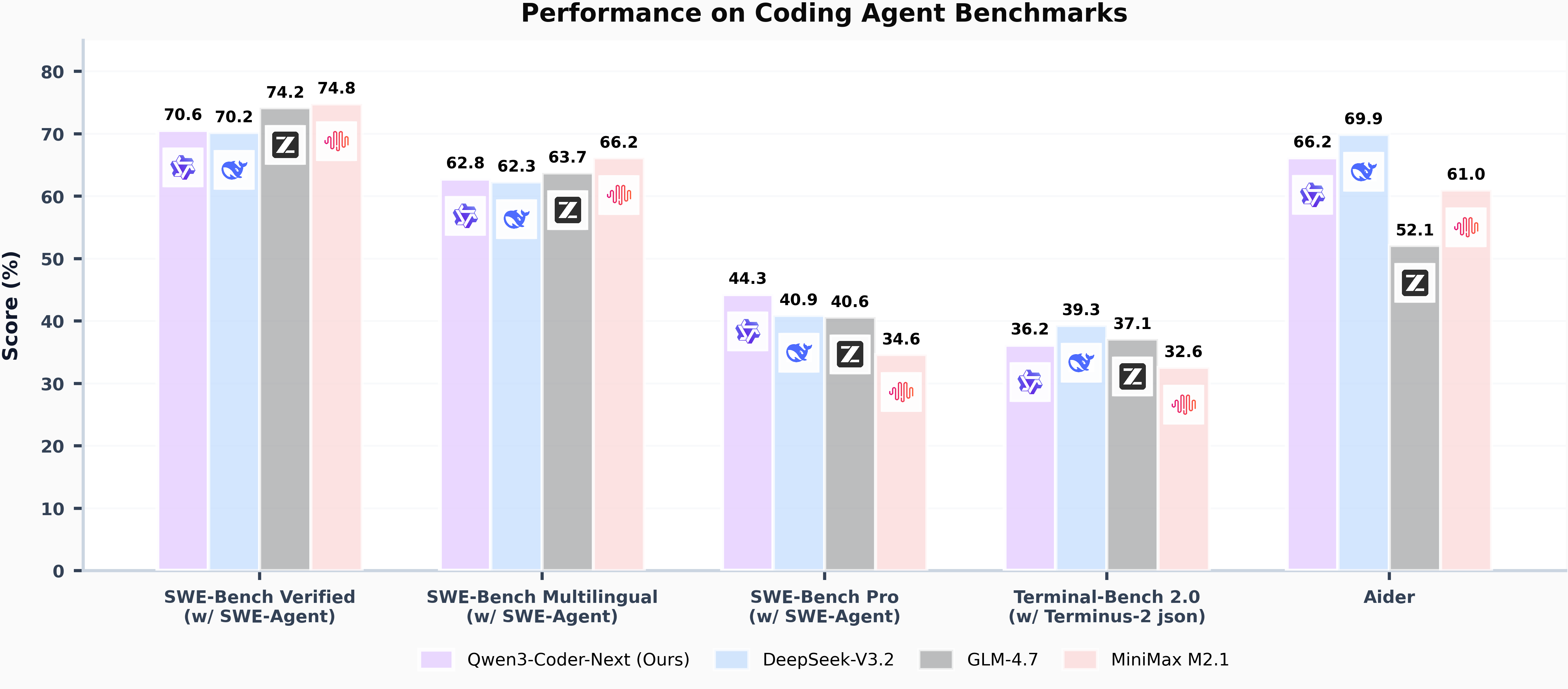

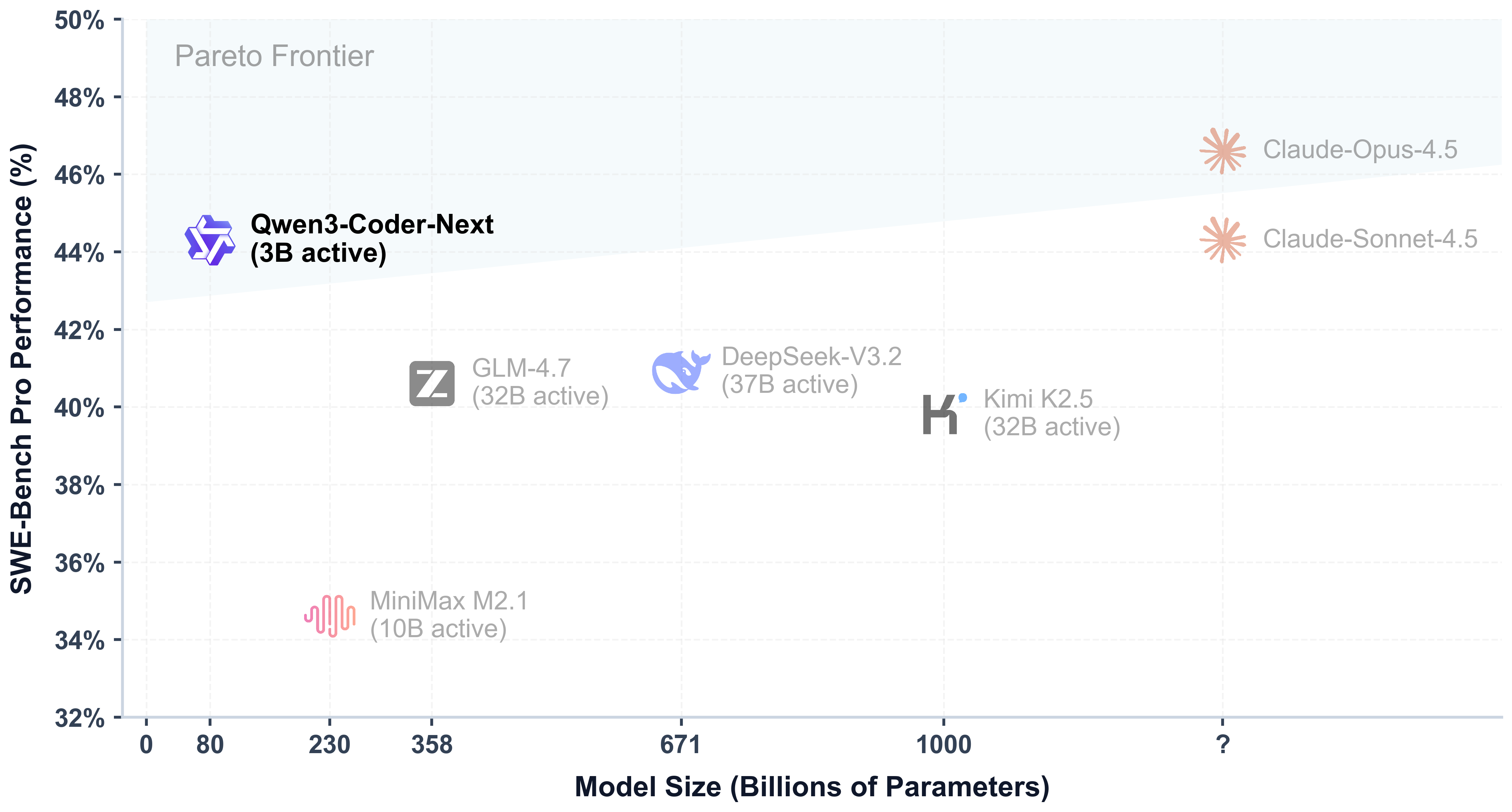

- **Super Efficient with Significant Performance**: With only 3B activated parameters (80B total parameters), it achieves performance comparable to models with 10–20x more active parameters, making it highly cost-effective for agent deployment.

|

| 18 |

+

- **Advanced Agentic Capabilities**: Through an elaborate training recipe, it excels at long-horizon reasoning, complex tool usage, and recovery from execution failures, ensuring robust performance in dynamic coding tasks.

|

| 19 |

+

- **Versatile Integration with Real-World IDE**: Its 256k context length, combined with adaptability to various scaffold templates, enables seamless integration with different CLI/IDE platforms (e.g., Claude Code, Qwen Code, Qoder, Kilo, Trae, Cline, etc.), supporting diverse development environments.

## Model Overview

**Qwen3-Coder-Next** has the following features:

- Type: Causal Language Model
- Training Stage: Pretraining & Post-training
- Number of Parameters: 80B in total and 3B activated
- Number of Parameters (Non-Embedding): 79B
- Hidden Dimension: 2048
- Number of Layers: 48
  - Hybrid Layout: 12 \* (3 \* (Gated DeltaNet -> MoE) -> 1 \* (Gated Attention -> MoE))
- Gated Attention:
  - Number of Attention Heads: 16 for Q and 2 for KV
  - Head Dimension: 256
  - Rotary Position Embedding Dimension: 64
- Gated DeltaNet:
  - Number of Linear Attention Heads: 32 for V and 16 for QK
  - Head Dimension: 128
- Mixture of Experts:
  - Number of Experts: 512
  - Number of Activated Experts: 10
  - Number of Shared Experts: 1
  - Expert Intermediate Dimension: 512
- Context Length: 262,144 natively
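
The layout and Mixture-of-Experts entries above can be made concrete with a short sketch. It expands the hybrid layout into the token mixer used by each of the 48 layers and shows generic top-k routing that selects 10 of 512 routed experts per token. This is an illustration under stated assumptions, not the model's implementation: `expand_layout` and `route` are hypothetical helpers, and the router is a plain softmax over the top-k logits (equivalently, top-k probabilities renormalized to sum to 1). The card does not specify the exact gating function.

```python
import math
import random

# Hybrid layout from the card: 12 * (3 * (Gated DeltaNet -> MoE) -> 1 * (Gated Attention -> MoE))
NUM_BLOCKS = 12
DELTANET_PER_BLOCK = 3
ATTENTION_PER_BLOCK = 1

NUM_EXPERTS = 512   # routed experts
NUM_ACTIVATED = 10  # routed experts chosen per token
# The 1 shared expert is applied to every token in addition; it is not modeled here.


def expand_layout() -> list[str]:
    """Return the token mixer of each of the 48 layers, in order.

    Every layer is followed by an MoE feed-forward block, so only the mixer is listed.
    """
    layers: list[str] = []
    for _ in range(NUM_BLOCKS):
        layers += ["gated_deltanet"] * DELTANET_PER_BLOCK
        layers += ["gated_attention"] * ATTENTION_PER_BLOCK
    return layers


def route(router_logits: list[float], k: int = NUM_ACTIVATED) -> list[tuple[int, float]]:
    """Generic top-k routing (an assumption, not the model's exact gate).

    Picks the k experts with the largest router logits and softmax-normalizes
    their logits so that the k mixing weights sum to 1.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    max_logit = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - max_logit) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


layers = expand_layout()
print(len(layers), layers.count("gated_deltanet"), layers.count("gated_attention"))

random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
chosen = route(logits)
print(len(chosen), round(sum(w for _, w in chosen), 6))
```

Running it prints `48 36 12` (36 Gated DeltaNet layers and 12 Gated Attention layers) and `10 1.0` (ten routed experts whose weights sum to one).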

**NOTE: This model supports only non-thinking mode and does not generate ``<think></think>`` blocks in its output. Meanwhile, specifying `enable_thinking=False` is no longer required.**

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to our [blog](https://qwenlm.github.io/blog/qwen3-coder-next/), [GitHub](https://github.com/QwenLM/Qwen3-Coder), and [Documentation](https://qwen.readthedocs.io/en/latest/).


## Quickstart

We advise you to use the latest version of `transformers`.

The following code snippet illustrates how to use the model to generate content based on given inputs.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Coder-Next"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# prepare the model input
prompt = "Write a quick sort algorithm."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=65536
)
# keep only the newly generated tokens (drop the prompt)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

content = tokenizer.decode(output_ids, skip_special_tokens=True)

print("content:", content)
```

**Note: If you encounter out-of-memory (OOM) issues, consider reducing the context length to a shorter value, such as `32,768`.**
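
To see how context length feeds into memory use, here is a back-of-envelope estimate. It assumes BF16 storage (2 bytes per value) and that only the 12 Gated Attention layers (2 KV heads, head dimension 256) keep a per-token key/value cache, while the Gated DeltaNet layers carry a fixed-size state that does not grow with context. Activations, that fixed state, and framework overhead are ignored, and real serving engines may reserve memory differently. `kv_cache_bytes` and `weight_bytes` are hypothetical helpers.

```python
# Back-of-envelope memory estimate (a sketch; constants come from the Model Overview,
# with BF16 = 2 bytes per value as an assumption).
BYTES_PER_VALUE = 2        # assumed BF16 weights and cache
NUM_ATTENTION_LAYERS = 12  # 12 * (3 Gated DeltaNet + 1 Gated Attention) = 48 layers
NUM_KV_HEADS = 2           # 16 query heads share 2 KV heads
HEAD_DIM = 256             # Gated Attention head dimension
TOTAL_PARAMS = 80e9        # 80B total parameters
GIB = 1024 ** 3


def kv_cache_bytes(num_tokens: int, batch_size: int = 1) -> int:
    """Bytes of key/value cache for the Gated Attention layers only.

    Gated DeltaNet layers keep a fixed-size recurrent state instead of a
    per-token cache, so they are not counted here.
    """
    per_token = 2 * NUM_ATTENTION_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE  # 2 = keys + values
    return per_token * num_tokens * batch_size


def weight_bytes() -> float:
    """Bytes to hold all 80B parameters, assuming every expert stays in memory."""
    return TOTAL_PARAMS * BYTES_PER_VALUE


for context_length in (262_144, 32_768):
    gib = kv_cache_bytes(context_length) / GIB
    print(f"context {context_length:>7,}: KV cache ~{gib:.2f} GiB per sequence")
print(f"weights: ~{weight_bytes() / GIB:.1f} GiB")
```

Under these assumptions the cache is about 6.00 GiB per sequence at 262,144 tokens and 0.75 GiB at 32,768 tokens, on top of roughly 149 GiB of BF16 weights. The cache term also scales with the number of concurrent sequences (`batch_size`), which is what lowering the context length trims.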

For local use, applications such as Ollama, LM Studio, MLX-LM, llama.cpp, and KTransformers also support Qwen3.

## Agentic Coding

Qwen3-Coder-Next excels at tool calling.

You can define your own tools and pass them to the model, as in the following example, which sends requests to an OpenAI-compatible endpoint.

```python
from openai import OpenAI

# Your tool implementation
# (the parameter name must match the "input_num" property declared in the schema below)
def square_the_number(input_num: float) -> float:
    return input_num ** 2

# Define Tools
tools = [
    {
        "type": "function",
        "function": {
            "name": "square_the_number",
            "description": "Output the square of the number.",
            "parameters": {
                "type": "object",
                "required": ["input_num"],
                "properties": {
                    "input_num": {
                        "type": "number",
                        "description": "input_num is a number that will be squared",
                    }
                },
            },
        },
    }
]

# Define LLM
client = OpenAI(
    # Use a custom endpoint compatible with the OpenAI API
    base_url="http://localhost:8000/v1",  # api_base
    api_key="EMPTY",
)

messages = [{"role": "user", "content": "square the number 1024"}]

completion = client.chat.completions.create(
    messages=messages,
    model="Qwen3-Coder-Next",
    max_tokens=65536,
    tools=tools,
)

print(completion.choices[0])
```
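
The example above only prints the model's first response. When the model decides to call a tool, `completion.choices[0].message.tool_calls` holds each call's function name and its arguments as a JSON string; your code runs the function and returns the result in a `role: "tool"` message. The sketch below shows that step under a few assumptions: `TOOL_REGISTRY` and `execute_tool_calls` are hypothetical names, and `SimpleNamespace` objects stand in for the SDK's response objects so the snippet runs without a server.

```python
import json
from types import SimpleNamespace


def square_the_number(input_num: float) -> float:
    """Local tool; mirrors the tool declared in `tools`."""
    return input_num ** 2


# Hypothetical registry mapping declared tool names to local callables.
TOOL_REGISTRY = {"square_the_number": square_the_number}


def execute_tool_calls(message) -> list[dict]:
    """Run every tool call in an assistant message and build `role: "tool"` replies."""
    results = []
    for call in message.tool_calls or []:  # tool_calls is None when no tool was requested
        func = TOOL_REGISTRY[call.function.name]
        args = json.loads(call.function.arguments)  # arguments arrive as a JSON string
        output = func(**args)
        results.append({
            "role": "tool",
            "tool_call_id": call.id,  # ties the result to the model's request
            "content": json.dumps(output),
        })
    return results


# Stand-in shaped like `completion.choices[0].message` when the model requests a tool call.
fake_message = SimpleNamespace(
    tool_calls=[
        SimpleNamespace(
            id="call_0",
            function=SimpleNamespace(name="square_the_number", arguments='{"input_num": 1024}'),
        )
    ]
)
print(execute_tool_calls(fake_message))
```

With a live server, you would append the assistant message and the returned tool messages to `messages`, then call `client.chat.completions.create(...)` again so the model can use the tool result in its final answer.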

## Best Practices

To achieve optimal performance, we recommend the following sampling parameters: `temperature=1.0`, `top_p=0.95`, `top_k=40`.
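
One way to apply these settings in the two usage paths shown earlier is sketched below. It assumes `do_sample=True` is needed for `transformers` to sample rather than decode greedily, and that the OpenAI-compatible server (for example vLLM) accepts `top_k` as an extra request field. `top_k` is not a standard OpenAI Chat Completions parameter, so it goes through the SDK's `extra_body` argument. The helper names are hypothetical.

```python
# Recommended sampling settings from this card.
RECOMMENDED_SAMPLING = {"temperature": 1.0, "top_p": 0.95, "top_k": 40}


def transformers_generate_kwargs(max_new_tokens: int = 65536) -> dict:
    """Keyword arguments for `model.generate(**model_inputs, **kwargs)` (hypothetical helper)."""
    # do_sample=True makes generate() sample, so temperature/top_p/top_k take effect.
    return {"do_sample": True, "max_new_tokens": max_new_tokens, **RECOMMENDED_SAMPLING}


def openai_create_kwargs(max_tokens: int = 65536) -> dict:
    """Keyword arguments for `client.chat.completions.create(...)` (hypothetical helper)."""
    # top_k is sent via extra_body; assumes the server accepts it as an extra field.
    return {
        "max_tokens": max_tokens,
        "temperature": RECOMMENDED_SAMPLING["temperature"],
        "top_p": RECOMMENDED_SAMPLING["top_p"],
        "extra_body": {"top_k": RECOMMENDED_SAMPLING["top_k"]},
    }


print(transformers_generate_kwargs())
print(openai_create_kwargs())
```

For example, the Quickstart call becomes `model.generate(**model_inputs, **transformers_generate_kwargs())`, and the tool-calling request becomes `client.chat.completions.create(messages=messages, model="Qwen3-Coder-Next", tools=tools, **openai_create_kwargs())`.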

## Citation

If you find our work helpful, feel free to cite us.

```
@misc{qwen3codernexttechnicalreport,
      title={Qwen3-Coder-Next Technical Report},
      author={Qwen Team},
      year={2026},
      eprint={},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/},
}
```