RynnBrain: Open Embodied Foundation Models

If you like our project, please give us a star ⭐ on Github for the latest update.

📰 News

- [2026.02.02] Release RynnBrain family weights and inference code.

- [2026.02.02] Add cookbooks for cognition, localization, reasoning, and planning.

✨ Introduction

RynnBrain aims to serve as a physics-aware embodied brain: it observes egocentric scenes, grounds language to physical space and time, and supports downstream robotic systems with reliable localization and planning outputs.

Key Highlights

Comprehensive egocentric understanding

Strong spatial comprehension and egocentric cognition across embodied QA, counting, OCR, and fine-grained video understanding.Diverse spatiotemporal localization

Locates objects, target areas, and predicts trajectories across long episodic context, enabling global spatial awareness.Physical-space grounded reasoning (RynnBrain family)

The broader RynnBrain family includes “Thinking” variants that interleave textual reasoning with spatial grounding to anchor reasoning in reality.Physics-aware precise planning (RynnBrain family)

Integrates localized affordances/areas/objects into planning outputs to provide downstream VLA models with precise instructions.

🌎 Model Zoo

| Model | Base Model | Huggingface | Modelscope |

|---|---|---|---|

| RynnBrain-2B | Qwen3-VL-2B-Instruct | Link | Link |

| RynnBrain-8B (This Checkpoint) | Qwen3-VL-8B-Instruct | Link | Link |

| RynnBrain-30B-A3B | Qwen3-VL-30B-A3B-Instruct | Link | Link |

| RynnBrain‑CoP-8B | RynnBrain-8B | Link | Link |

| RynnBrain‑Plan-8B | RynnBrain-8B | Link | Link |

| RynnBrain‑Plan-30B-A3B | RynnBrain-30B-A3B | Link | Link |

| RynnBrain‑Nav-8B | RynnBrain-8B | Link | Link |

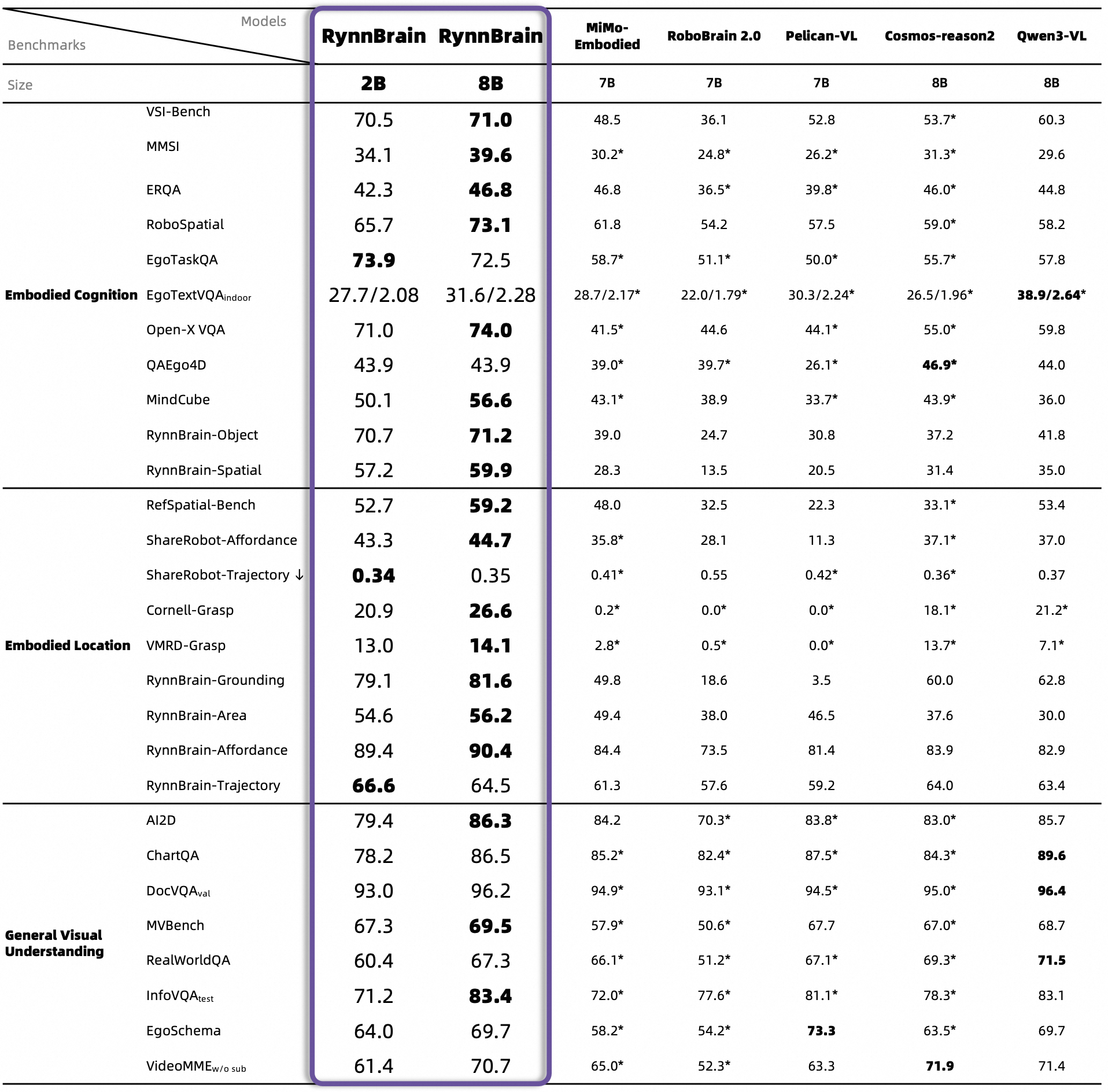

🚀 Main Results

🤖 Quick Start

Minimal dependencies:

pip install transformers==4.57.1

Run text generation:

from transformers import AutoModelForImageTextToText

model = AutoModelForImageTextToText.from_pretrained("")

...

Cookbooks

Checkout the cookbooks that showcase RynnBrain's capabilities in cognition, localization, reasoning, and planning.

| Category | Cookbook name | Description |

|---|---|---|

| Cognition | 1_spatial_understanding.ipynb | Shows the ability of model for spaital understanding in the video scene. |

| Cognition | 2_object_understanding.ipynb | Shows how the model understands object categories, attributes, and relations and counting ability. |

| Cognition | 3_ocr.ipynb | Examples of optical character recognition and text understanding in videos. |

| Location | 4_object_location.ipynb | Locates specific objects with bounding boxes in an image or video based on instructions. |

| Location | 5_area_location.ipynb | Identifies and marks specified regions by points in an image or video. |

| Location | 6_affordance_location.ipynb | Finds areas or objects with specific affordances in an image of video. |

| Location | 7_trajectory_location.ipynb | Infers and annotates trajectories or motion paths in an image or video. |

| Location | 8_grasp_pose.ipynb | Present the model's abiltiy to predict robotic grasp poses from images. |

📑 Citation

If you find RynnBrain useful for your research and applications, please cite using this BibTeX:

@article{damo2026rynnbrain,

title={RynnBrain: Open Embodied Foundation Models},

author={Ronghao Dang, Jiayan Guo, Bohan Hou, Sicong Leng, Kehan Li, Xin Li, Jiangpin Liu, Yunxuan Mao, Zhikai Wang, Yuqian Yuan, Minghao Zhu, Xiao Lin, Yang Bai, Qian Jiang, Yaxi Zhao, Minghua Zeng, Junlong Gao, Yuming Jiang, Jun Cen, Siteng Huang, Liuyi Wang, Wenqiao Zhang, Chengju Liu, Jianfei Yang, Shijian Lu, Deli Zhao},

journal={arXiv preprint arXiv:2602.14979v1},

year={2026},

url = {https://arxiv.org/abs/2602.14979v1}

}

- Downloads last month

- 293