Spaces:

Sleeping

Sleeping

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- LICENSE +197 -0

- MANIFEST.in +39 -0

- README.md +203 -8

- cli/README.md +167 -0

- cli/__init__.py +5 -0

- cli/main.py +238 -0

- demo_server.py +321 -0

- deploy_setup.py +43 -0

- lf_algorithm/__init__.py +46 -0

- lf_algorithm/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/__pycache__/agent_manager.cpython-313.pyc +0 -0

- lf_algorithm/__pycache__/framework_agent.cpython-313.pyc +0 -0

- lf_algorithm/agent_manager.py +84 -0

- lf_algorithm/framework_agent.py +130 -0

- lf_algorithm/models/__pycache__/models.cpython-313.pyc +0 -0

- lf_algorithm/models/models.py +285 -0

- lf_algorithm/plugins/__init__.py +1 -0

- lf_algorithm/plugins/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/__init__.py +1 -0

- lf_algorithm/plugins/airflow_lineage_agent/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/__pycache__/airflow_instructions.cpython-313.pyc +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/__pycache__/lineage_agent.cpython-313.pyc +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/airflow_instructions.py +98 -0

- lf_algorithm/plugins/airflow_lineage_agent/lineage_agent.py +98 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/__init__.py +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/__pycache__/mcp_params.cpython-313.pyc +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/mcp_airflow_lineage/__init__.py +0 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/mcp_airflow_lineage/lineage_airflow_server.py +55 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/mcp_airflow_lineage/templates.py +777 -0

- lf_algorithm/plugins/airflow_lineage_agent/mcp_servers/mcp_params.py +9 -0

- lf_algorithm/plugins/java_lineage_agent/__init__.py +1 -0

- lf_algorithm/plugins/java_lineage_agent/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/plugins/java_lineage_agent/__pycache__/java_instructions.cpython-313.pyc +0 -0

- lf_algorithm/plugins/java_lineage_agent/__pycache__/lineage_agent.cpython-313.pyc +0 -0

- lf_algorithm/plugins/java_lineage_agent/java_instructions.py +98 -0

- lf_algorithm/plugins/java_lineage_agent/lineage_agent.py +97 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/__init__.py +0 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/__pycache__/mcp_params.cpython-313.pyc +0 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/mcp_java_lineage/__init__.py +0 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/mcp_java_lineage/lineage_java_server.py +55 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/mcp_java_lineage/templates.py +605 -0

- lf_algorithm/plugins/java_lineage_agent/mcp_servers/mcp_params.py +9 -0

- lf_algorithm/plugins/python_lineage_agent/__init__.py +1 -0

- lf_algorithm/plugins/python_lineage_agent/__pycache__/__init__.cpython-313.pyc +0 -0

- lf_algorithm/plugins/python_lineage_agent/__pycache__/lineage_agent.cpython-313.pyc +0 -0

- lf_algorithm/plugins/python_lineage_agent/__pycache__/python_instructions.cpython-313.pyc +0 -0

- lf_algorithm/plugins/python_lineage_agent/lineage_agent.py +97 -0

- lf_algorithm/plugins/python_lineage_agent/mcp_servers/__init__.py +0 -0

LICENSE

ADDED

|

@@ -0,0 +1,197 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity granting the License.

|

| 13 |

+

|

| 14 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 15 |

+

other entities that control, are controlled by, or are under common

|

| 16 |

+

control with that entity. For the purposes of this definition,

|

| 17 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 18 |

+

direction or management of such entity, whether by contract or

|

| 19 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 20 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 21 |

+

|

| 22 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 23 |

+

exercising permissions granted by this License.

|

| 24 |

+

|

| 25 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 26 |

+

including but not limited to software source code, documentation

|

| 27 |

+

source, and configuration files.

|

| 28 |

+

|

| 29 |

+

"Object" form shall mean any form resulting from mechanical

|

| 30 |

+

transformation or translation of a Source form, including but

|

| 31 |

+

not limited to compiled object code, generated documentation,

|

| 32 |

+

and conversions to other media types.

|

| 33 |

+

|

| 34 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 35 |

+

Object form, made available under the License, as indicated by a

|

| 36 |

+

copyright notice that is included in or attached to the work

|

| 37 |

+

(which shall not include communications that are clearly marked or

|

| 38 |

+

otherwise designated in writing by the copyright owner as "Not a Contribution").

|

| 39 |

+

|

| 40 |

+

"Contribution" shall mean any work of authorship, including

|

| 41 |

+

the original version of the Work and any modifications or additions

|

| 42 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 43 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 44 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 45 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 46 |

+

means any form of electronic, verbal, or written communication sent

|

| 47 |

+

to the Licensor or its representatives, including but not limited to

|

| 48 |

+

communication on electronic mailing lists, source code control systems,

|

| 49 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 50 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 51 |

+

excluding communication that is conspicuously marked or otherwise

|

| 52 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 53 |

+

|

| 54 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 55 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 56 |

+

subsequently incorporated within the Work.

|

| 57 |

+

|

| 58 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 59 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 60 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 61 |

+

copyright license to use, reproduce, modify, merge, publish,

|

| 62 |

+

distribute, sublicense, and/or sell copies of the Work, and to

|

| 63 |

+

permit persons to whom the Work is furnished to do so, subject to

|

| 64 |

+

the following conditions:

|

| 65 |

+

|

| 66 |

+

The above copyright notice and this permission notice shall be

|

| 67 |

+

included in all copies or substantial portions of the Work.

|

| 68 |

+

|

| 69 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 70 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 71 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 72 |

+

(except as stated in this section) patent license to make, have made,

|

| 73 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 74 |

+

where such license applies only to those patent claims licensable

|

| 75 |

+

by such Contributor that are necessarily infringed by their

|

| 76 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 77 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 78 |

+

institute patent litigation against any entity (including a

|

| 79 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 80 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 81 |

+

or contributory patent infringement, then any patent licenses

|

| 82 |

+

granted to You under this License for that Work shall terminate

|

| 83 |

+

as of the date such litigation is filed.

|

| 84 |

+

|

| 85 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 86 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 87 |

+

modifications, and in Source or Object form, provided that You

|

| 88 |

+

meet the following conditions:

|

| 89 |

+

|

| 90 |

+

(a) You must give any other recipients of the Work or

|

| 91 |

+

Derivative Works a copy of this License; and

|

| 92 |

+

|

| 93 |

+

(b) You must cause any modified files to carry prominent notices

|

| 94 |

+

stating that You changed the files; and

|

| 95 |

+

|

| 96 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 97 |

+

that You distribute, all copyright, trademark, patent, and

|

| 98 |

+

other attribution notices from the Source form of the Work,

|

| 99 |

+

excluding those notices that do not pertain to any part of

|

| 100 |

+

the Derivative Works; and

|

| 101 |

+

|

| 102 |

+

(d) If the Work includes a "NOTICE" file as part of its

|

| 103 |

+

distribution, then any Derivative Works that You distribute must

|

| 104 |

+

include a readable copy of the attribution notices contained

|

| 105 |

+

within such NOTICE file, excluding those notices that do not

|

| 106 |

+

pertain to any part of the Derivative Works, in at least one

|

| 107 |

+

of the following places: within a NOTICE file distributed

|

| 108 |

+

as part of the Derivative Works; within the Source form or

|

| 109 |

+

documentation, if provided along with the Derivative Works; or,

|

| 110 |

+

within a display generated by the Derivative Works, if and

|

| 111 |

+

wherever such third-party notices normally appear. The contents

|

| 112 |

+

of the NOTICE file are for informational purposes only and

|

| 113 |

+

do not modify the License. You may add Your own attribution

|

| 114 |

+

notices within Derivative Works that You distribute, alongside

|

| 115 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 116 |

+

that such additional attribution notices cannot be construed

|

| 117 |

+

as modifying the License.

|

| 118 |

+

|

| 119 |

+

You may add Your own copyright notice to Your modifications and

|

| 120 |

+

may provide additional or different license terms and conditions

|

| 121 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 122 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 123 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 124 |

+

the conditions stated in this License.

|

| 125 |

+

|

| 126 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 127 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 128 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 129 |

+

this License, without any additional terms or conditions.

|

| 130 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 131 |

+

the terms of any separate license agreement you may have executed

|

| 132 |

+

with Licensor regarding such Contributions.

|

| 133 |

+

|

| 134 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 135 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 136 |

+

except as required for reasonable and customary use in describing the

|

| 137 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 138 |

+

|

| 139 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 140 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 141 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 142 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 143 |

+

implied, including, without limitation, any warranties or conditions

|

| 144 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 145 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 146 |

+

appropriateness of using or redistributing the Work and assume any

|

| 147 |

+

risks associated with Your exercise of permissions under this License.

|

| 148 |

+

|

| 149 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 150 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 151 |

+

unless required by applicable law (such as deliberate and grossly

|

| 152 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 153 |

+

liable to You for damages, including any direct, indirect, special,

|

| 154 |

+

incidental, or consequential damages of any character arising as a

|

| 155 |

+

result of this License or out of the use or inability to use the

|

| 156 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 157 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 158 |

+

other commercial damages or losses), even if such Contributor

|

| 159 |

+

has been advised of the possibility of such damages.

|

| 160 |

+

|

| 161 |

+

9. Accepting Warranty or Additional Liability. When redistributing

|

| 162 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 163 |

+

and to charge a fee for, acceptance of support, warranty, indemnity,

|

| 164 |

+

or other liability obligations and/or rights consistent with this

|

| 165 |

+

License. However, in accepting such obligations, You may act only

|

| 166 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 167 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 168 |

+

defend, and hold each Contributor harmless for any liability

|

| 169 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 170 |

+

of your accepting any such warranty or additional liability.

|

| 171 |

+

|

| 172 |

+

END OF TERMS AND CONDITIONS

|

| 173 |

+

|

| 174 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 175 |

+

|

| 176 |

+

To apply the Apache License to your work, attach the following

|

| 177 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 178 |

+

replaced with your own identifying information. (Don't include

|

| 179 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 180 |

+

comment syntax for the file format. We also recommend that a

|

| 181 |

+

file or class name and description of purpose be included on the

|

| 182 |

+

same page as the copyright notice for easier identification within

|

| 183 |

+

third-party archives.

|

| 184 |

+

|

| 185 |

+

Copyright [yyyy] [name of copyright owner]

|

| 186 |

+

|

| 187 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 188 |

+

you may not use this file except in compliance with the License.

|

| 189 |

+

You may obtain a copy of the License at

|

| 190 |

+

|

| 191 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 192 |

+

|

| 193 |

+

Unless required by applicable law or agreed to in writing, software

|

| 194 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 195 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 196 |

+

See the License for the specific language governing permissions and

|

| 197 |

+

limitations under the License.

|

MANIFEST.in

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

include README.md

|

| 2 |

+

include LICENSE

|

| 3 |

+

include pyproject.toml

|

| 4 |

+

include MANIFEST.in

|

| 5 |

+

|

| 6 |

+

# Include images directory for README.md

|

| 7 |

+

recursive-include images *

|

| 8 |

+

|

| 9 |

+

# Include package data

|

| 10 |

+

recursive-include algorithm *.json

|

| 11 |

+

recursive-include algorithm *.yaml

|

| 12 |

+

recursive-include algorithm *.yml

|

| 13 |

+

recursive-include algorithm *.txt

|

| 14 |

+

recursive-include algorithm *.md

|

| 15 |

+

recursive-include cli *.json

|

| 16 |

+

recursive-include cli *.yaml

|

| 17 |

+

recursive-include cli *.yml

|

| 18 |

+

recursive-include cli *.txt

|

| 19 |

+

recursive-include cli *.md

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# Include templates and configuration files

|

| 23 |

+

recursive-include lf_algorithm/plugins/*/mcp_servers/*/templates.py

|

| 24 |

+

recursive-include lf_algorithm/plugins/*/mcp_servers/*/mcp_params.py

|

| 25 |

+

|

| 26 |

+

# Exclude development files

|

| 27 |

+

global-exclude *.pyc

|

| 28 |

+

global-exclude *.pyo

|

| 29 |

+

global-exclude __pycache__

|

| 30 |

+

global-exclude .DS_Store

|

| 31 |

+

global-exclude *.log

|

| 32 |

+

global-exclude .pytest_cache

|

| 33 |

+

global-exclude .mypy_cache

|

| 34 |

+

global-exclude .venv

|

| 35 |

+

global-exclude venv

|

| 36 |

+

global-exclude env

|

| 37 |

+

global-exclude .env

|

| 38 |

+

global-exclude .pypirc

|

| 39 |

+

global-exclude .ruff_cache

|

README.md

CHANGED

|

@@ -1,12 +1,207 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

colorFrom: blue

|

| 5 |

-

colorTo: purple

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 5.

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: lineagentic-flow

|

| 3 |

+

app_file: start_demo_server.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

+

sdk_version: 5.39.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

|

| 8 |

+

<div align="center">

|

| 9 |

+

<img src="https://raw.githubusercontent.com/lineagentic/lineagentic-flow/main/images/logo.jpg" alt="Lineagentic Logo" width="880" height="300">

|

| 10 |

+

</div>

|

| 11 |

+

|

| 12 |

+

## Lineagentic-flow

|

| 13 |

+

|

| 14 |

+

Lineagentic-flow is an agentic ai solution for building end-to-end data lineage across diverse types of data processing scripts across different platforms. It is designed to be modular and customizable, and can be extended to support new data processing script types. In a nutshell this is what it does:

|

| 15 |

+

|

| 16 |

+

```

|

| 17 |

+

┌─────────────┐ ┌───────────────────────────────┐ ┌────────────---───┐

|

| 18 |

+

│ source-code │───▶│ lineagentic-flow-algorithm │───▶│ lineage output │

|

| 19 |

+

│ │ │ │ │ │

|

| 20 |

+

└─────────────┘ └───────────────────────────────┘ └──────────────---─┘

|

| 21 |

+

```

|

| 22 |

+

### Features

|

| 23 |

+

|

| 24 |

+

- Plugin based design pattern, simple to extend and customize.

|

| 25 |

+

- Command line interface for quick analysis.

|

| 26 |

+

- Support for multiple data processing script types (SQL, Python, Airflow Spark, etc.)

|

| 27 |

+

- Simple demo server to run locally and in huggingface spaces.

|

| 28 |

+

|

| 29 |

+

## Quick Start

|

| 30 |

+

|

| 31 |

+

### Installation

|

| 32 |

+

|

| 33 |

+

Install the package from PyPI:

|

| 34 |

+

|

| 35 |

+

```bash

|

| 36 |

+

pip install lineagentic-flow

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

### Basic Usage

|

| 40 |

+

|

| 41 |

+

```python

|

| 42 |

+

import asyncio

|

| 43 |

+

from lf_algorithm.framework_agent import FrameworkAgent

|

| 44 |

+

import logging

|

| 45 |

+

|

| 46 |

+

logging.basicConfig(

|

| 47 |

+

level=logging.INFO,

|

| 48 |

+

format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'

|

| 49 |

+

)

|

| 50 |

+

|

| 51 |

+

async def main():

|

| 52 |

+

# Create an agent for SQL lineage extraction

|

| 53 |

+

agent = FrameworkAgent(

|

| 54 |

+

agent_name="sql-lineage-agent",

|

| 55 |

+

model_name="gpt-4o-mini",

|

| 56 |

+

source_code="SELECT id, name FROM users WHERE active = true"

|

| 57 |

+

)

|

| 58 |

+

|

| 59 |

+

# Run the agent to extract lineage

|

| 60 |

+

result = await agent.run_agent()

|

| 61 |

+

print(result)

|

| 62 |

+

|

| 63 |

+

# Run the example

|

| 64 |

+

asyncio.run(main())

|

| 65 |

+

```

|

| 66 |

+

### Supported Agents

|

| 67 |

+

|

| 68 |

+

Following table shows the current development agents in Lineagentic-flow algorithm:

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

| **Agent Name** | **Done** | **Under Development** | **In Backlog** | **Comment** |

|

| 72 |

+

|----------------------|:--------:|:----------------------:|:--------------:|--------------------------------------|

|

| 73 |

+

| python-lineage_agent | ✓ | | | |

|

| 74 |

+

| airflow_lineage_agent | ✓ | | | |

|

| 75 |

+

| java_lineage_agent | ✓ | | | |

|

| 76 |

+

| spark_lineage_agent | ✓ | | | |

|

| 77 |

+

| sql_lineage_agent | ✓ | | | |

|

| 78 |

+

| flink_lineage_agent | | | ✓ | |

|

| 79 |

+

| beam_lineage_agent | | | ✓ | |

|

| 80 |

+

| shell_lineage_agent | | | ✓ | |

|

| 81 |

+

| scala_lineage_agent | | | ✓ | |

|

| 82 |

+

| dbt_lineage_agent | | | ✓ | |

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

### Environment Variables

|

| 86 |

+

|

| 87 |

+

Set your API keys:

|

| 88 |

+

|

| 89 |

+

```bash

|

| 90 |

+

export OPENAI_API_KEY="your-openai-api-key"

|

| 91 |

+

export HF_TOKEN="your-huggingface-token" # Optional

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

## What are the components of Lineagentic-flow?

|

| 95 |

+

|

| 96 |

+

- Algorithm module: This is the brain of the Lineagentic-flow. It contains agents, which are implemented as plugins and acting as chain of thought process to extract lineage from different types of data processing scripts. The module is built using a plugin-based design pattern, allowing you to easily develop and integrate your own custom agents.

|

| 97 |

+

|

| 98 |

+

- CLI module: is for command line around algorithm API and connect to unified service layer

|

| 99 |

+

|

| 100 |

+

- Demo module: is for teams who want to demo Lineagentic-flow in fast and simple way deployable into huggingface spaces.

|

| 101 |

+

|

| 102 |

+

#### Command Line Interface (CLI)

|

| 103 |

+

|

| 104 |

+

Lineagentic-flow provides a powerful CLI tool for quick analysis:

|

| 105 |

+

|

| 106 |

+

```bash

|

| 107 |

+

# Basic SQL query analysis

|

| 108 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT user_id, name FROM users WHERE active = true" --verbose

|

| 109 |

+

|

| 110 |

+

# Analyze with lineage configuration

|

| 111 |

+

lineagentic analyze --agent-name python-lineage-agent --query-file "my_script.py" --verbose

|

| 112 |

+

|

| 113 |

+

```

|

| 114 |

+

for more details see [CLI documentation](cli/README.md).

|

| 115 |

+

|

| 116 |

+

### environment variables

|

| 117 |

+

|

| 118 |

+

- HF_TOKEN (HUGGINGFACE_TOKEN)

|

| 119 |

+

- OPENAI_API_KEY

|

| 120 |

+

|

| 121 |

+

### Architecture

|

| 122 |

+

|

| 123 |

+

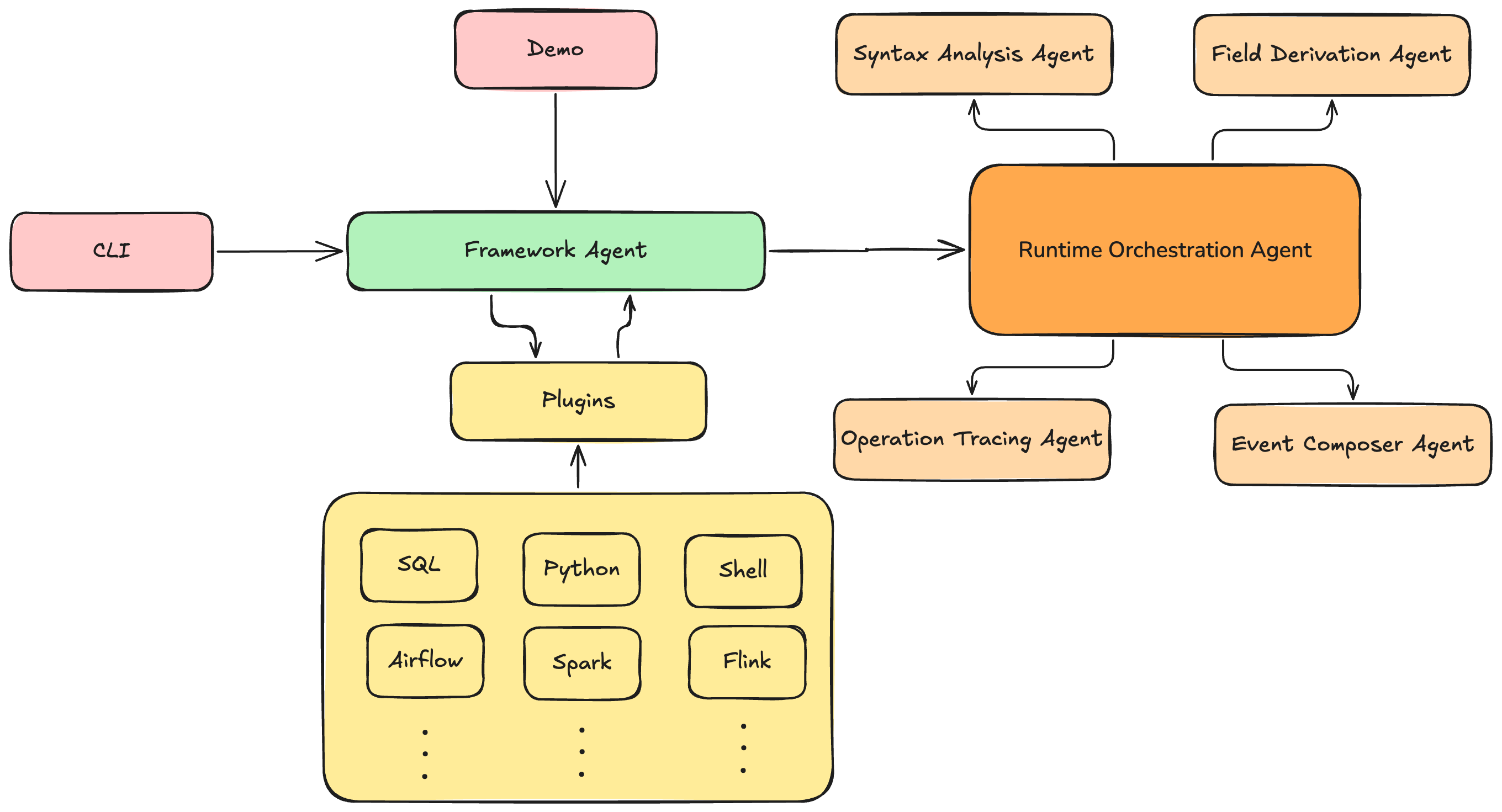

The following figure illustrates the architecture behind the Lineagentic-flow, which is essentially a multi-layer architecture of backend and agentic AI algorithm that leverages a chain-of-thought process to construct lineage across various script types.

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

## Mathematic behind algorithm

|

| 129 |

+

|

| 130 |

+

Following shows mathematic behind each layer of algorithm.

|

| 131 |

+

|

| 132 |

+

### Agent framework

|

| 133 |

+

The agent framework dose IO operations ,memory management, and prompt engineering according to the script type (T) and its content (C).

|

| 134 |

+

|

| 135 |

+

$$

|

| 136 |

+

P := f(T, C)

|

| 137 |

+

$$

|

| 138 |

+

|

| 139 |

+

## Runtime orchestration agent

|

| 140 |

+

|

| 141 |

+

The runtime orchestration agent orchestrates the execution of the required agents provided by the agent framework (P) by selecting the appropriate agent (A) and its corresponding task (T).

|

| 142 |

+

|

| 143 |

+

$$

|

| 144 |

+

G=h([\{(A_1, T_1), (A_2, T_2), (A_3, T_3), (A_4, T_4)\}],P)

|

| 145 |

+

$$

|

| 146 |

+

|

| 147 |

+

## Syntax Analysis Agent

|

| 148 |

+

|

| 149 |

+

Syntax Analysis agent, analyzes the syntactic structure of the raw script to identify subqueries and nested structures and decompose the script into multiple subscripts.

|

| 150 |

+

|

| 151 |

+

$$

|

| 152 |

+

\{sa1,⋯,san\}:=h([A_1,T_1],P)

|

| 153 |

+

$$

|

| 154 |

+

|

| 155 |

+

## Field Derivation Agent

|

| 156 |

+

The Field Derivation agent processes each subscript from syntax analysis agent to derive field-level mapping relationships and processing logic.

|

| 157 |

+

|

| 158 |

+

$$

|

| 159 |

+

\{fd1,⋯,fdn\}:=h([A_2,T_2],\{sa1,⋯,san\})

|

| 160 |

+

$$

|

| 161 |

+

|

| 162 |

+

## Operation Tracing Agent

|

| 163 |

+

The Operation Tracing agent analyzes the complex conditions within each subscript identified in syntax analysis agent including filter conditions, join conditions, grouping conditions, and sorting conditions.

|

| 164 |

+

|

| 165 |

+

$$

|

| 166 |

+

\{ot1,⋯,otn\}:=h([A_3,T_3],\{sa1,⋯,san\})

|

| 167 |

+

$$

|

| 168 |

+

|

| 169 |

+

## Event Composer Agent

|

| 170 |

+

The Event Composer agent consolidates the results from the syntax analysis agent, the field derivation agent and the operation tracing agent to generate the final lineage result.

|

| 171 |

+

|

| 172 |

+

$$

|

| 173 |

+

\{A\}:=h([A_4,T_4],\{sa1,⋯,san\},\{fd1,⋯,fdn\},\{ot1,⋯,otn\})

|

| 174 |

+

$$

|

| 175 |

+

|

| 176 |

+

|

| 177 |

+

|

| 178 |

+

## Activation and Deployment

|

| 179 |

+

|

| 180 |

+

To simplify the usage of Lineagentic-flow, a Makefile has been created to manage various activation and deployment tasks. You can explore the available targets directly within the Makefile. Here you can find different strategies but for more details look into Makefile.

|

| 181 |

+

|

| 182 |

+

1- to start demo server:

|

| 183 |

+

|

| 184 |

+

```bash

|

| 185 |

+

make start-demo-server

|

| 186 |

+

```

|

| 187 |

+

2- to do all tests:

|

| 188 |

+

|

| 189 |

+

```bash

|

| 190 |

+

make test

|

| 191 |

+

```

|

| 192 |

+

3- to build package:

|

| 193 |

+

|

| 194 |

+

```bash

|

| 195 |

+

make build-package

|

| 196 |

+

```

|

| 197 |

+

4- to clean all stack:

|

| 198 |

+

|

| 199 |

+

```bash

|

| 200 |

+

make clean-all-stack

|

| 201 |

+

```

|

| 202 |

+

|

| 203 |

+

5- In order to deploy Lineagentic-flow to Hugging Face Spaces, run the following command ( you need to have huggingface account and put secret keys there if you are going to use paid models):

|

| 204 |

+

|

| 205 |

+

```bash

|

| 206 |

+

make gradio-deploy

|

| 207 |

+

```

|

cli/README.md

ADDED

|

@@ -0,0 +1,167 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Lineagentic-flow CLI

|

| 2 |

+

|

| 3 |

+

A command-line interface for the Lineagentic-flow framework that provides agentic data lineage parsing across various data processing script types.

|

| 4 |

+

|

| 5 |

+

## Installation

|

| 6 |

+

|

| 7 |

+

The CLI is automatically installed when you install the lineagentic-flow package:

|

| 8 |

+

|

| 9 |

+

```bash

|

| 10 |

+

pip install -e .

|

| 11 |

+

```

|

| 12 |

+

|

| 13 |

+

## Usage

|

| 14 |

+

|

| 15 |

+

The CLI provides two main commands: `analyze` and `field-lineage`.

|

| 16 |

+

|

| 17 |

+

### Basic Commands

|

| 18 |

+

|

| 19 |

+

#### Analyze Query/Code for Lineage

|

| 20 |

+

```bash

|

| 21 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "your code here"

|

| 22 |

+

```

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

### Running Analysis

|

| 26 |

+

|

| 27 |

+

#### Using a Specific Agent

|

| 28 |

+

```bash

|

| 29 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT a,b FROM table1"

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

#### Using a File as Input

|

| 33 |

+

```bash

|

| 34 |

+

lineagentic analyze --agent-name python-lineage-agent --query-file path/to/your/script.py

|

| 35 |

+

```

|

| 36 |

+

|

| 37 |

+

#### Specifying a Different Model

|

| 38 |

+

```bash

|

| 39 |

+

lineagentic analyze --agent-name airflow-lineage-agent --model-name gpt-4o --query "your code here"

|

| 40 |

+

```

|

| 41 |

+

|

| 42 |

+

#### With Lineage Configuration

|

| 43 |

+

```bash

|

| 44 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT * FROM users" --job-namespace "my-namespace" --job-name "my-job"

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

### Output Options

|

| 48 |

+

|

| 49 |

+

#### Pretty Print Results

|

| 50 |

+

```bash

|

| 51 |

+

lineagentic analyze --agent-name sql --query "your code" --pretty

|

| 52 |

+

```

|

| 53 |

+

|

| 54 |

+

#### Save Results to File

|

| 55 |

+

```bash

|

| 56 |

+

lineagentic analyze --agent-name sql --query "your code" --output results.json

|

| 57 |

+

```

|

| 58 |

+

|

| 59 |

+

#### Save Results with Pretty Formatting

|

| 60 |

+

```bash

|

| 61 |

+

lineagentic analyze --agent-name python --query "your code" --output results.json --pretty

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

#### Enable Verbose Output

|

| 65 |

+

```bash

|

| 66 |

+

lineagentic analyze --agent-name sql --query "your code" --verbose

|

| 67 |

+

```

|

| 68 |

+

|

| 69 |

+

## Available Agents

|

| 70 |

+

|

| 71 |

+

- **sql-lineage-agent**: Analyzes SQL queries and scripts (default)

|

| 72 |

+

- **airflow-lineage-agent**: Analyzes Apache Airflow DAGs and workflows

|

| 73 |

+

- **spark-lineage-agent**: Analyzes Apache Spark jobs

|

| 74 |

+

- **python-lineage-agent**: Analyzes Python data processing scripts

|

| 75 |

+

- **java-lineage-agent**: Analyzes Java data processing code

|

| 76 |

+

|

| 77 |

+

## Commands

|

| 78 |

+

|

| 79 |

+

### `analyze` Command

|

| 80 |

+

|

| 81 |

+

Analyzes a query or code for lineage information.

|

| 82 |

+

|

| 83 |

+

#### Required Arguments

|

| 84 |

+

- Either `--query` or `--query-file` must be specified

|

| 85 |

+

|

| 86 |

+

### Basic Query Analysis

|

| 87 |

+

```bash

|

| 88 |

+

# Simple SQL query analysis

|

| 89 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT user_id, name FROM users WHERE active = true"

|

| 90 |

+

|

| 91 |

+

# Analyze with specific agent

|

| 92 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT a, b FROM table1 JOIN table2 ON table1.id = table2.id"

|

| 93 |

+

|

| 94 |

+

# Analyze Python code

|

| 95 |

+

lineagentic analyze --agent-name python-lineage-agent --query "import pandas as pd; df = pd.read_csv('data.csv'); result = df.groupby('category').sum()"

|

| 96 |

+

|

| 97 |

+

# Analyze Java code

|

| 98 |

+

lineagentic analyze --agent-name java-lineage-agent --query "public class DataProcessor { public void processData() { // processing logic } }"

|

| 99 |

+

|

| 100 |

+

# Analyze Spark code

|

| 101 |

+

lineagentic analyze --agent-name spark-lineage-agent --query "val df = spark.read.csv('data.csv'); val result = df.groupBy('category').agg(sum('value'))"

|

| 102 |

+

|

| 103 |

+

# Analyze Airflow DAG

|

| 104 |

+

lineagentic analyze --agent-name airflow-lineage-agent --query "from airflow import DAG; from airflow.operators.python import PythonOperator; dag = DAG('my_dag')"

|

| 105 |

+

```

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

### Reading from File

|

| 109 |

+

```bash

|

| 110 |

+

# Analyze query from file

|

| 111 |

+

lineagentic analyze --agent-name sql-lineage-agent --query-file "queries/user_analysis.sql"

|

| 112 |

+

|

| 113 |

+

# Analyze Python script from file

|

| 114 |

+

lineagentic analyze --agent-name python-lineage-agent --query-file "scripts/data_processing.py"

|

| 115 |

+

```

|

| 116 |

+

|

| 117 |

+

### Output Options

|

| 118 |

+

```bash

|

| 119 |

+

# Save results to file

|

| 120 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT * FROM users" --output "results.json"

|

| 121 |

+

|

| 122 |

+

# Pretty print results

|

| 123 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT * FROM users" --pretty

|

| 124 |

+

|

| 125 |

+

# Verbose output

|

| 126 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT * FROM users" --verbose

|

| 127 |

+

|

| 128 |

+

# Don't save to database

|

| 129 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT * FROM users" --no-save

|

| 130 |

+

|

| 131 |

+

# Don't save to Neo4j

|

| 132 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT * FROM users" --no-neo4j

|

| 133 |

+

```

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

## Common Output Options

|

| 138 |

+

|

| 139 |

+

Both commands support these output options:

|

| 140 |

+

|

| 141 |

+

- `--output`: Output file path for results (JSON format)

|

| 142 |

+

- `--pretty`: Pretty print the output

|

| 143 |

+

- `--verbose`: Enable verbose output

|

| 144 |

+

|

| 145 |

+

## Error Handling

|

| 146 |

+

|

| 147 |

+

The CLI provides clear error messages for common issues:

|

| 148 |

+

|

| 149 |

+

- Missing required arguments

|

| 150 |

+

- File not found errors

|

| 151 |

+

- Agent execution errors

|

| 152 |

+

- Invalid agent names

|

| 153 |

+

|

| 154 |

+

## Development

|

| 155 |

+

|

| 156 |

+

To run the CLI in development mode:

|

| 157 |

+

|

| 158 |

+

```bash

|

| 159 |

+

python -m cli.main --help

|

| 160 |

+

```

|

| 161 |

+

|

| 162 |

+

To run a specific command:

|

| 163 |

+

|

| 164 |

+

```bash

|

| 165 |

+

python -m cli.main analyze --agent-name sql --query "SELECT 1" --pretty

|

| 166 |

+

```

|

| 167 |

+

|

cli/__init__.py

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

CLI package for lineagentic framework.

|

| 3 |

+

"""

|

| 4 |

+

|

| 5 |

+

__version__ = "0.1.0"

|

cli/main.py

ADDED

|

@@ -0,0 +1,238 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

"""

|

| 3 |

+

Main CLI entry point for lineagentic framework.

|

| 4 |

+

"""

|

| 5 |

+

|

| 6 |

+

import asyncio

|

| 7 |

+

import argparse

|

| 8 |

+

import sys

|

| 9 |

+

import os

|

| 10 |

+

import logging

|

| 11 |

+

from pathlib import Path

|

| 12 |

+

|

| 13 |

+

# Add the project root to the Python path

|

| 14 |

+

project_root = Path(__file__).parent.parent

|

| 15 |

+

sys.path.insert(0, str(project_root))

|

| 16 |

+

|

| 17 |

+

from lf_algorithm.framework_agent import FrameworkAgent

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

def configure_logging(verbose: bool = False, quiet: bool = False):

|

| 21 |

+

"""Configure logging for the CLI application."""

|

| 22 |

+

if quiet:

|

| 23 |

+

# Quiet mode: only show errors

|

| 24 |

+

logging.basicConfig(

|

| 25 |

+

level=logging.ERROR,

|

| 26 |

+

format='%(levelname)s: %(message)s'

|

| 27 |

+

)

|

| 28 |

+

elif verbose:

|

| 29 |

+

# Verbose mode: show all logs with detailed format

|

| 30 |

+

logging.basicConfig(

|

| 31 |

+

level=logging.INFO,

|

| 32 |

+

format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',

|

| 33 |

+

datefmt='%Y-%m-%d %H:%M:%S'

|

| 34 |

+

)

|

| 35 |

+

else:

|

| 36 |

+

# Normal mode: show only important logs with clean format

|

| 37 |

+

logging.basicConfig(

|

| 38 |

+

level=logging.WARNING, # Only show warnings and errors by default

|

| 39 |

+

format='%(levelname)s: %(message)s'

|

| 40 |

+

)

|

| 41 |

+

|

| 42 |

+

# Set specific loggers to INFO level for better user experience

|

| 43 |

+

logging.getLogger('lf_algorithm').setLevel(logging.INFO)

|

| 44 |

+

logging.getLogger('lf_algorithm.framework_agent').setLevel(logging.INFO)

|

| 45 |

+

logging.getLogger('lf_algorithm.agent_manager').setLevel(logging.INFO)

|

| 46 |

+

|

| 47 |

+

# Suppress noisy server logs from MCP tools

|

| 48 |

+

logging.getLogger('mcp').setLevel(logging.WARNING)

|

| 49 |

+

logging.getLogger('agents.mcp').setLevel(logging.WARNING)

|

| 50 |

+

logging.getLogger('agents.mcp.server').setLevel(logging.WARNING)

|

| 51 |

+

logging.getLogger('agents.mcp.server.stdio').setLevel(logging.WARNING)

|

| 52 |

+

logging.getLogger('agents.mcp.server.stdio.stdio').setLevel(logging.WARNING)

|

| 53 |

+

|

| 54 |

+

# Suppress MCP library logs specifically

|

| 55 |

+

logging.getLogger('mcp.server').setLevel(logging.WARNING)

|

| 56 |

+

logging.getLogger('mcp.server.fastmcp').setLevel(logging.WARNING)

|

| 57 |

+

logging.getLogger('mcp.server.stdio').setLevel(logging.WARNING)

|

| 58 |

+

|

| 59 |

+

# Suppress any logger that contains 'server' in the name

|

| 60 |

+

for logger_name in logging.root.manager.loggerDict:

|

| 61 |

+

if 'server' in logger_name.lower():

|

| 62 |

+

logging.getLogger(logger_name).setLevel(logging.WARNING)

|

| 63 |

+

|

| 64 |

+

# Additional MCP-specific suppressions

|

| 65 |

+

logging.getLogger('mcp.server.stdio.stdio').setLevel(logging.WARNING)

|

| 66 |

+

logging.getLogger('mcp.server.stdio.stdio.stdio').setLevel(logging.WARNING)

|

| 67 |

+

|

| 68 |

+

def create_parser():

|

| 69 |

+

"""Create and configure the argument parser."""

|

| 70 |

+

parser = argparse.ArgumentParser(

|

| 71 |

+

description="Lineagentic - Agentic approach for code analysis and lineage extraction",

|

| 72 |

+

formatter_class=argparse.RawDescriptionHelpFormatter,

|

| 73 |

+

epilog="""

|

| 74 |

+

Examples:

|

| 75 |

+

|

| 76 |

+

lineagentic analyze --agent-name sql-lineage-agent --query "SELECT a,b FROM table1"

|

| 77 |

+

lineagentic analyze --agent-name python-lineage-agent --query-file "my_script.py"

|

| 78 |

+

"""

|

| 79 |

+

)

|

| 80 |

+

|

| 81 |

+

# Create subparsers for the two main operations

|

| 82 |

+

subparsers = parser.add_subparsers(dest='command', help='Available commands')

|

| 83 |

+

|

| 84 |

+

# Analyze query subparser

|

| 85 |

+

analyze_parser = subparsers.add_parser('analyze', help='Analyze code or query for lineage information')

|

| 86 |

+

analyze_parser.add_argument(

|

| 87 |

+

"--agent-name",

|

| 88 |

+

type=str,

|

| 89 |

+

default="sql",

|

| 90 |

+

help="Name of the agent to use (e.g., sql, airflow, spark, python, java) (default: sql)"

|

| 91 |

+

)

|

| 92 |

+

analyze_parser.add_argument(

|

| 93 |

+

"--model-name",

|

| 94 |

+

type=str,

|

| 95 |

+

default="gpt-4o-mini",

|

| 96 |

+

help="Model to use for the agents (default: gpt-4o-mini)"

|

| 97 |

+

)

|

| 98 |

+

analyze_parser.add_argument(

|

| 99 |

+

"--query",

|

| 100 |

+

type=str,

|

| 101 |

+

help="Code or query to analyze"

|

| 102 |

+

)

|

| 103 |

+

analyze_parser.add_argument(

|

| 104 |

+

"--query-file",

|

| 105 |

+

type=str,

|

| 106 |

+

help="Path to file containing the query/code to analyze"

|

| 107 |

+

)

|

| 108 |

+

|

| 109 |

+

# Common output options

|

| 110 |

+

analyze_parser.add_argument(

|

| 111 |

+

"--output",

|

| 112 |

+

type=str,

|

| 113 |

+

help="Output file path for results (JSON format)"

|

| 114 |

+

)

|

| 115 |

+

analyze_parser.add_argument(

|

| 116 |

+

"--pretty",

|

| 117 |

+

action="store_true",

|

| 118 |

+

help="Pretty print the output"

|

| 119 |